Industry analysts have placed AI agents at the peak of the technology Hype Cycle, amid concerns about inflated expectations and a forecast that almost 40% of agentic projects will be scrapped. Even so, agents are not a passing trend.

An agent is simply a software entity that observes its environment, reasons about that observation, and acts on the environment.

Ten years ago, that "reasoning" step might have been just some hard-coded software rules. The game-changer today is that agents now have the powerful brain of Large Language Models (LLMs) to handle the reasoning part. This is what is bringing agents to a whole new level.
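As a rough illustration, the observe-reason-act loop can be sketched in a few lines of Python. The `Agent` class, the toy counter environment, and the function names are all hypothetical. The hard-coded rule stands in for the reasoning step, which is the part an LLM call would replace.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Minimal observe-reason-act loop; all names here are illustrative."""
    observe: Callable[[], str]      # reads the environment
    reason: Callable[[str], str]    # maps an observation to an action
    act: Callable[[str], None]      # applies the action to the environment
    history: list = field(default_factory=list)

    def step(self) -> str:
        observation = self.observe()
        action = self.reason(observation)
        self.act(action)
        self.history.append((observation, action))
        return action


# A toy environment: a counter the agent should raise to 3.
state = {"count": 0}


def rule_based_reason(observation: str) -> str:
    # Hard-coded rule standing in for the "reasoning" step; an LLM call
    # could replace this function without changing the loop.
    return "increment" if int(observation) < 3 else "stop"


def apply_action(action: str) -> None:
    if action == "increment":
        state["count"] += 1


agent = Agent(
    observe=lambda: str(state["count"]),
    reason=rule_based_reason,
    act=apply_action,
)

# Keep stepping until the reasoning step decides to stop.
while agent.step() != "stop":
    pass
```

Swapping `rule_based_reason` for a function that prompts a model is the only change needed to turn this toy into an LLM-driven loop, which is why the reasoning step is kept as a plain callable.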

The adoption rate is staggering. The 2025 Stack Overflow Developer Survey shows that 84% of respondents are using or planning to use AI tools in their development process, an increase from 76% the previous year. At this rate, we'll soon be at a point where nearly every developer is using AI tools. Used well, these tools are a massive time saver, and companies shouldn't underestimate them.

Web scraping is still hard for agents

But while AI agents have proven to be a massive boon for general software development, many are asking whether that impact translates directly to the world of web scraping. The simple answer, right now, is “no”.

Web scraping presents a set of unique and difficult challenges that push current AI agents to their limits. The web is complex, dynamic, and intricate, and agents struggle with it.

Here are some of the core problems:

Long HTML

The sheer size of modern web pages is a major hurdle. With many modern web pages containing hundreds of thousands of tokens of HTML, and some exceeding a million, target HTML content often exceeds the context window of even the most powerful LLMs. An agent can't reason about what it can't see.
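To get a feel for the numbers, here is a back-of-the-envelope check. It assumes roughly four characters per token, which is a crude rule of thumb rather than a real tokenizer, and the window sizes and function names are purely illustrative.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Crude token estimate; a real tokenizer will give different counts."""
    return int(len(text) / chars_per_token)


def fits_context(text: str, context_window: int, reserved: int = 4_000) -> bool:
    """True if the text plus a reserve for instructions and output fits."""
    return estimate_tokens(text) + reserved <= context_window


# A synthetic "page": 40,000 repeated product cards, 1,000,000 characters.
page = "<div class='item'>x</div>" * 40_000

tokens = estimate_tokens(page)
small_ok = fits_context(page, context_window=128_000)
large_ok = fits_context(page, context_window=400_000)
```

Under these assumptions the synthetic page comes to about 250,000 tokens, so it overflows the smaller window and fits only the larger one.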

Access restrictions

What do you do when you want to scrape something? You open a browser, go to the page, and—bam—you get a CAPTCHA. For an agent, that's game over. Overcoming bans, CAPTCHAs, and geo-limitations is a fundamental requirement that stumps many autonomous systems.

Long "trial and error" loops

Without a clear path, agents can get stuck in long, repetitive loops of trying something, failing, and trying again. This is not only inefficient but also quickly exhausts the agent’s limited context, causing it to lose track of its original goal.
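One common mitigation, sketched here under our own assumptions rather than as a description of any particular agent, is to give the loop an explicit attempt budget and to carry forward only a short summary of recent failures. The `run_with_budget` helper and the flaky task are hypothetical.

```python
from typing import Callable


def run_with_budget(
    attempt: Callable[[list], str],
    max_attempts: int = 3,
    keep_errors: int = 2,
) -> tuple:
    """Call `attempt` until it succeeds or the budget runs out.

    Only the last `keep_errors` error summaries are passed to the next
    attempt, so failed tries do not pile up in the agent's context.
    Returns (result or None, number of attempts used).
    """
    errors: list = []
    for used in range(1, max_attempts + 1):
        try:
            return attempt(errors), used
        except Exception as exc:
            errors = (errors + [f"attempt {used}: {exc}"])[-keep_errors:]
    return None, max_attempts


# Hypothetical task that fails twice before succeeding.
calls = {"n": 0}


def flaky_task(previous_errors: list) -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ValueError("selector matched nothing")
    return f"ok after seeing {len(previous_errors)} error summaries"


result, used = run_with_budget(flaky_task, max_attempts=5)
```

When the budget is exhausted the helper returns `None` instead of looping forever, which gives the caller a clear point at which to escalate to a human or a different strategy.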

Adaptability

Websites change constantly. One day, your spider works perfectly; the next, a small front-end update has broken all your selectors. We humans can adapt to these changes almost instantly, but an agent needs a robust monitoring and adaptation mechanism to handle this dynamism.
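A minimal sketch of the monitoring half of that mechanism: measure how often each required field is actually filled in a batch of scraped items, and flag fields that fall below a threshold. The field names, the 90% threshold, and both helper functions are illustrative assumptions.

```python
def field_coverage(items: list, required: list) -> dict:
    """Share of items in which each required field has a non-empty value."""
    if not items:
        return {name: 0.0 for name in required}
    return {
        name: sum(1 for item in items if item.get(name) not in (None, "", []))
        / len(items)
        for name in required
    }


def suspect_fields(items: list, required: list, threshold: float = 0.9) -> list:
    """Fields whose coverage dropped below the threshold, sorted by name."""
    coverage = field_coverage(items, required)
    return sorted(name for name, ratio in coverage.items() if ratio < threshold)


# After a hypothetical front-end change, the price selector stops matching.
items = [
    {"name": "Kettle", "price": "24.99"},
    {"name": "Toaster", "price": None},
    {"name": "Blender", "price": ""},
    {"name": "Mixer", "price": None},
]

alerts = suspect_fields(items, required=["name", "price"])
```

A check like this only detects the breakage. Repairing the selectors is the adaptation step, which is where an agent or a developer comes back in.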

Reproducibility

With non-deterministic elements like A/B tests, client-side rendering, and lazy-loading, the same URL can present different HTML on subsequent requests. This lack of consistency makes it incredibly difficult for an agent to create a reliable, reproducible scraping process.
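One way to regain reproducibility, shown here as a sketch rather than as Zyte's approach, is to freeze a snapshot of the HTML and develop against that file instead of the live URL. A content fingerprint makes it easy to notice when two fetches of the same URL differ. The function names and file layout are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def fingerprint(html: str) -> str:
    """Short, stable content hash of a page body."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()[:16]


def save_snapshot(html: str, directory: Path) -> Path:
    """Store the page under its fingerprint and return the file path."""
    path = directory / f"{fingerprint(html)}.html"
    path.write_text(html, encoding="utf-8")
    return path


# Two responses for the same URL, as an A/B test might produce them.
variant_a = "<h1 class='title'>Kettle</h1>"
variant_b = "<h1 class='product-title'>Kettle</h1>"
same_page = fingerprint(variant_a) == fingerprint(variant_b)

with tempfile.TemporaryDirectory() as tmp:
    snapshot = save_snapshot(variant_a, Path(tmp))
    # Extraction code is then developed and tested against this frozen copy.
    frozen = snapshot.read_text(encoding="utf-8")
```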

Scalability

It's one thing to use an agent to help write a single spider. But how do you scale that to write 1,000 custom spiders? An interactive, one-at-a-time process doesn't scale.

Human-in-the-loop

Given the issues above, some human oversight is often necessary. But how often? Finding the right balance between automation and manual intervention is a non-trivial trade-off between flexibility and efficiency.

Safety

How do you ensure an agent doesn't do something it's not supposed to? The risk of prompt injection attacks and other security vulnerabilities is a serious concern when granting an agent autonomous capabilities.

Helping agents scrape: Zyte’s research and experience

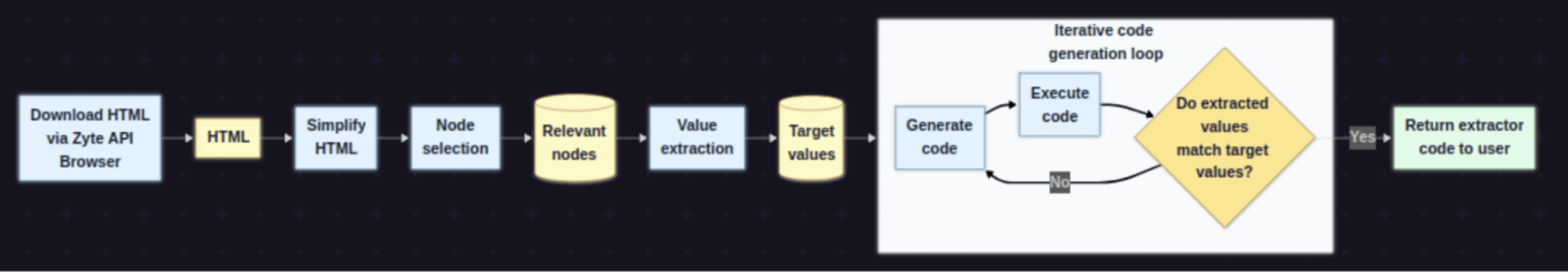

At Zyte, we've been tackling these challenges head-on. In late 2024, we started by creating our own "mini-agent" to automatically write data extraction code. The workflow went like this:

This API was used internally by our developers, who found that it saved significant time. But with standalone agents becoming more powerful, we asked ourselves: “Is this ‘mini-agent’ app still worth it? Couldn’t we just ask a powerful agent like GitHub Copilot to write the whole scraper?”

We tried it and found that even the most powerful agent, given large instruction files, does not come close to what real end-to-end, fully autonomous scraping should look like. For example, the agent would often ignore the specific data schema format we provided.

This led us to a better approach: delegation.

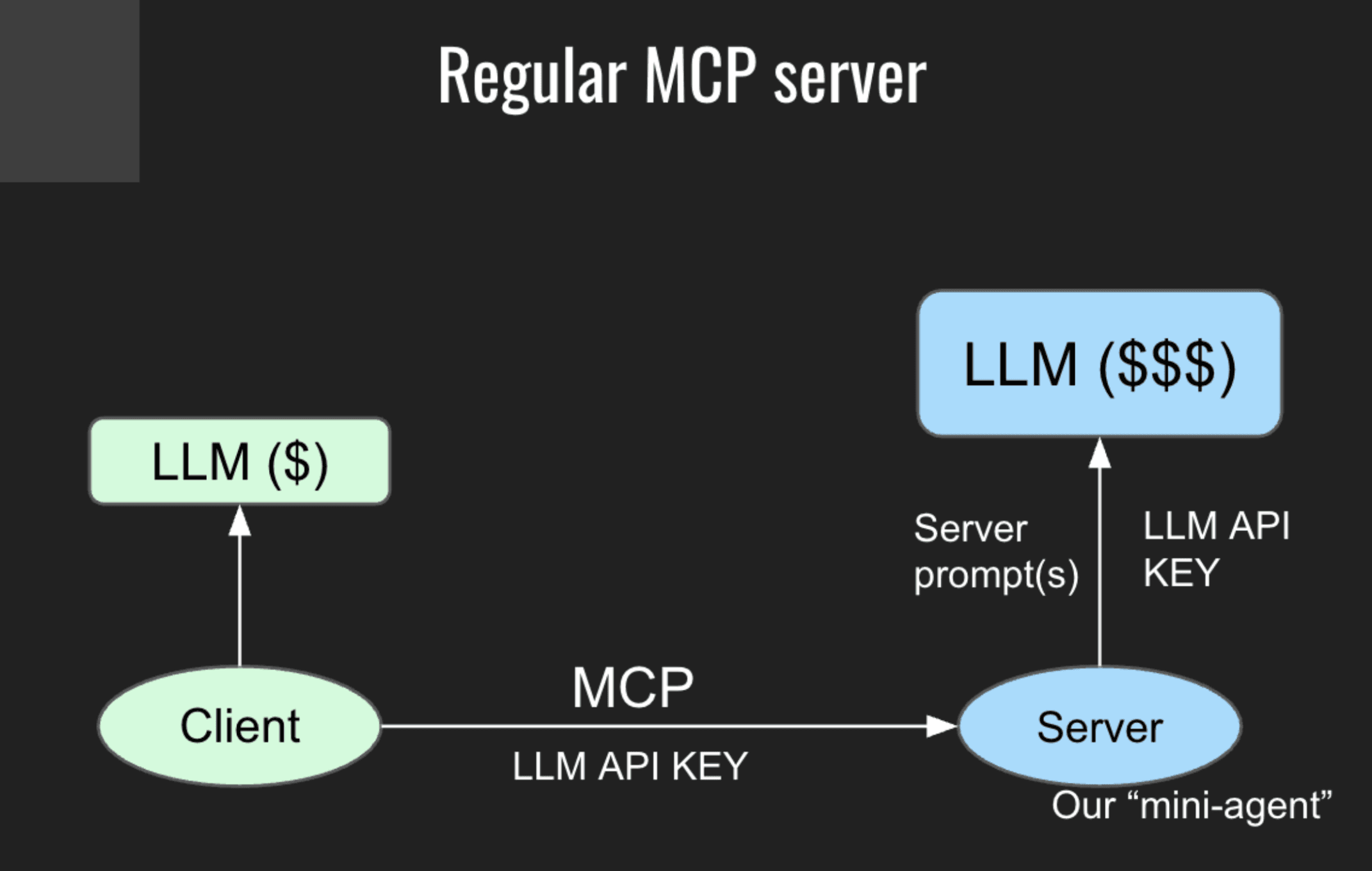

All of these issues are solved if the main agent, running through GitHub Copilot, can delegate the hard, specific parts of the task, such as code generation, to our standalone "mini-agent".

But then the question was: “How do we make that tool available to the main agent?” Putting it behind an API and requiring users to sign up and use another key felt clunky. We wanted the code editor to have direct access to the code as it was being written.

MCP sampling with local efficiency

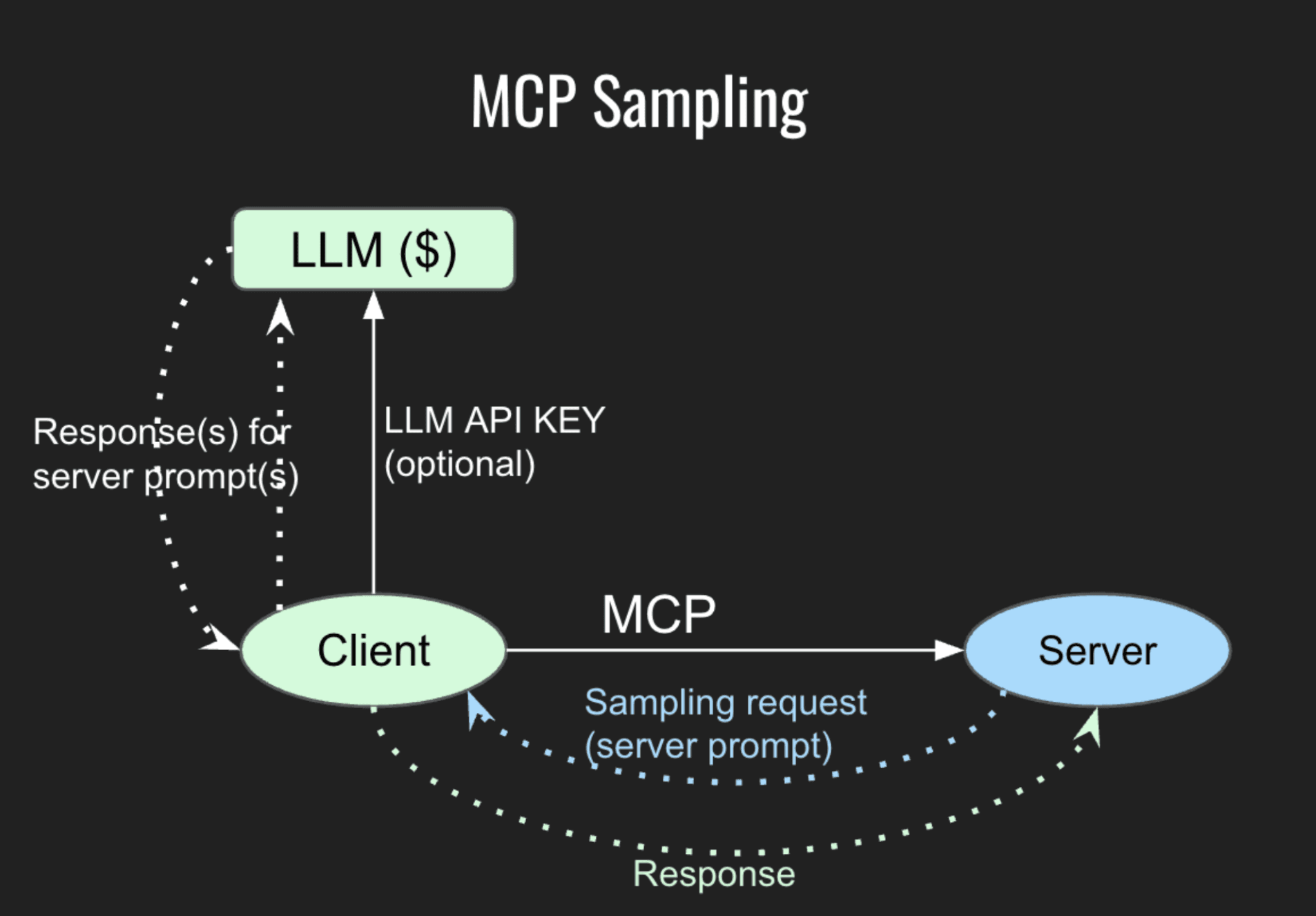

The solution we found was MCP sampling.

The idea is simple but powerful. Previously, our server was making its own (expensive) calls to an LLM provider like OpenAI.

Instead, we package the logic of our "mini-agent" so it can run locally on the user's machine. The tool then routes its requests through the LLM that the user’s GitHub Copilot already has access to. The client takes the prompts from our tool, runs them through that LLM (this is the "sampling"), and returns the responses. This makes the process cheaper and more transparent, as all the code is running locally.
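On the wire, sampling is a request that goes from the MCP server to the client. The sketch below builds such a request by hand, following the `sampling/createMessage` method of the Model Context Protocol specification. It is not Zyte's implementation: the helper names, the prompts, and the simulated client response are made up for illustration, and a real server would normally use an MCP SDK rather than assembling JSON itself.

```python
import json
from itertools import count

_request_ids = count(1)


def build_sampling_request(prompt: str, system_prompt: str, max_tokens: int) -> dict:
    """JSON-RPC request a server sends to ask the client's LLM for a completion."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "sampling/createMessage",
        "params": {
            "messages": [
                {"role": "user", "content": {"type": "text", "text": prompt}}
            ],
            "systemPrompt": system_prompt,
            "maxTokens": max_tokens,
        },
    }


def extract_text(response: dict) -> str:
    """Pull the generated text out of the client's response."""
    content = response["result"]["content"]
    if content.get("type") != "text":
        raise ValueError("expected a text completion")
    return content["text"]


request = build_sampling_request(
    prompt="Write a CSS selector for the product name in: <h1 class='title'>Kettle</h1>",
    system_prompt="You write data extraction code.",
    max_tokens=200,
)
wire_message = json.dumps(request)

# Simulated client reply; the model name and text are placeholders.
response = {
    "jsonrpc": "2.0",
    "id": request["id"],
    "result": {
        "role": "assistant",
        "content": {"type": "text", "text": "h1.title::text"},
        "model": "example-model",
        "stopReason": "endTurn",
    },
}
selector = extract_text(response)
```

The server never holds an API key in this flow. It only describes what it wants generated, and the client decides which model answers.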

Of course, this approach has limitations. The LLM completions provided by tools like VS Code have different context limits than the native models. For example, GPT-5 in VS Code Copilot has a max context of 128k tokens, whereas the OpenAI API version has 400k.

This is why our internal process of HTML simplification is so critical to making the workflow successful.
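Zyte's simplification logic is not shown here. The following is only a generic sketch of the idea using Python's standard `html.parser`: drop scripts, styles and comments, and keep a small allowlist of attributes. The tag and attribute lists are assumptions chosen for illustration.

```python
import re
from html import escape
from html.parser import HTMLParser

DROP_TAGS = {"script", "style", "noscript", "svg"}  # removed with their content
KEEP_ATTRS = {"id", "class", "href"}                # everything else is dropped


class HTMLSimplifier(HTMLParser):
    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)
        self.parts: list = []
        self.skip_depth = 0  # > 0 while inside a dropped element

    def handle_starttag(self, tag, attrs):
        if tag in DROP_TAGS:
            self.skip_depth += 1
        elif not self.skip_depth:
            kept = "".join(
                f' {name}="{escape(value)}"'
                for name, value in attrs
                if name in KEEP_ATTRS and value
            )
            self.parts.append(f"<{tag}{kept}>")

    def handle_endtag(self, tag):
        if tag in DROP_TAGS:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif not self.skip_depth:
            self.parts.append(f"</{tag}>")

    def handle_data(self, data):
        # Comments never reach this method, so they are dropped implicitly.
        if not self.skip_depth and data.strip():
            self.parts.append(escape(re.sub(r"\s+", " ", data), quote=False))


def simplify_html(html: str) -> str:
    parser = HTMLSimplifier()
    parser.feed(html)
    parser.close()
    return "".join(parser.parts)


raw = (
    '<div class="p" style="color:red" data-x="1">'
    "<script>var a = 1;</script>"
    '<h1 id="t">  Kettle\n</h1><!-- promo -->'
    '<a href="/x" onclick="f()">Buy</a></div>'
)
simplified = simplify_html(raw)
```

Keeping `id`, `class` and `href` is a deliberate trade-off in this sketch: those are the attributes selectors and link-following usually depend on, while inline styles and event handlers mostly add tokens.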

Introducing Web Scraping Copilot

This work has led us to release Web Scraping Copilot, a free VS Code extension that helps you generate web scraping code with GitHub Copilot.

It streamlines working with Scrapy projects and includes optional integration with Scrapy Cloud, making it easier to deploy and monitor your web scraping jobs.

For us at Zyte, getting to “simple” was hard. We had to refactor a complete project that was already in use locally so that it would run in Visual Studio Code, and we had to integrate new technology while we were still adopting it.

Now, however, Web Scraping Copilot bundles all of the work described above, including HTML simplification.

Zyte test developers have reported a huge increase in productivity.

"In the past, when I needed to create a spider from scratch using a standard product schema, it took me eight hours. Now, in two hours I'm done. [Web Scraping Copilot API] makes a massive difference."

Test user, Web Scraping Copilot

Going beyond: The future of agents in web scraping

This is just the start. At Zyte, we are constantly researching and developing to take advantage of the incredible speed of AI innovation. This includes:

Dynamic crawling planner: We're working on a system that can create a plan for crawling complex websites based on a simple user query. This would be a great starting point for agents to then create the spiders.

Improving Web Scraping Copilot: We continue to refine our tool to produce higher quality, more customizable code that adapts to a user's existing codebase and needs.

Adapting our existing tools: We are adapting our powerful open-source tools, like web-poet, to be more usable by LLMs. For example, Zyte's standard data schemas now have an llmHint parameter to provide better guidance to agents.

Alternative approaches: We're exploring alternative ways to structure tools and MCPs with different scopes, allowing for more advanced capabilities like automatically finding the best browser configuration for a given website.

Research drives relevance

Web scraping is hard, even for agents. The challenges are numerous and complex. However, delegating these hard, long tasks to specialized sub-agents or tools is a very effective strategy. We found that trying to make one monolithic agent do everything was less successful than a modular approach.

This field is evolving very quickly, and new capabilities arrive with every update. Anyone working in this space needs to stay tuned, play around with new features, and avoid being left behind.

At Zyte, we are working closely with these new technologies. Our goal is to apply them to automate fully customizable web scraping, tackling the hard problems so you don't have to.