Not so long ago, the "Dead Internet Theory" was easy to dismiss as just another online conspiracy.

The theory posits that the internet is no longer a human-driven space, but one where the majority of content is generated by artificial intelligence and bots, drowning out authentic human engagement.

When the idea first started circulating around 2018, it felt like a paranoid exaggeration. But the rapid rise of generative AI has turned it from a fringe idea into an observable reality - with profound consequences.

So, what is the current state of the artificial internet, and how can we, as data experts and individuals, combat this trend to strengthen the human web?

The numbers behind the noise

The synthetic web is a measurable phenomenon, and several recent reports paint a stark picture of it:

Imperva's 2024 Bad Bot Report: This analysis found that nearly 50% of all internet traffic now comes from non-human sources. Even more concerning is that "bad bots" - those used for malicious purposes - now comprise almost a third of all traffic.

Wired / Pangram Labs study: After analyzing over 274,000 posts on Medium, researchers estimated that a staggering 47% were likely AI-generated.

5th Column AI on X: An analysis of nearly 1.3 million accounts on X estimated that approximately 64% were potentially bots. During the 2024 Super Bowl, a report suggested that fake traffic on X surged to nearly 76%, far surpassing other platforms like TikTok (2.56%) and Facebook (2.01%).

This is a far cry from the wild, authentic internet many of us remember - the early days of pure Google search, when YouTube urged users to "Broadcast Yourself." Today, that human-centric web is being eroded by bot farms, automated accounts, and an overwhelming amount of AI-generated noise.

The platforms themselves have little incentive to fight back. When I report obvious bot accounts on Facebook, the response is often a variation of: "It does not go against our Community Standards."

Why would they be removed? For the platforms, such posts are engagement. Meta has even gone a step further by launching its own AI-managed profiles on Instagram, blurring the line between real and synthetic even more.

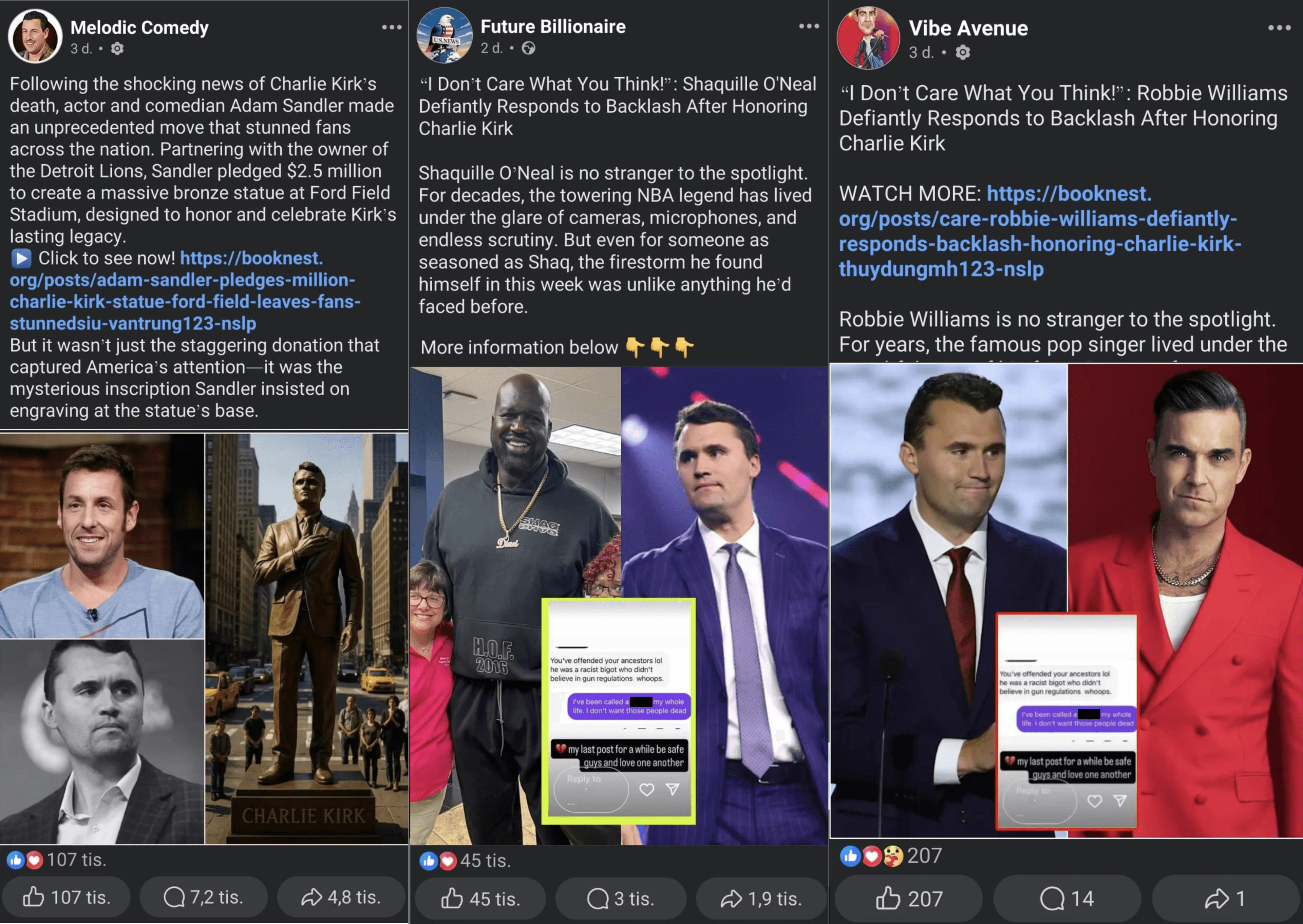

This engagement-at-any-cost logic has given rise to bizarre viral trends like "Shrimp Jesus," a series of nonsensical AI-generated images pairing religious figures with marine life.

Such posts are designed purely to farm engagement, filled with keywords and hashtags to attract likes and comments - which are often, in turn, generated by other bots. It's a self-perpetuating cycle of synthetic content.

While these posts are easy to laugh at, the same mechanics are used for far more serious purposes, from manipulating political discourse to executing large-scale scams and phishing attacks.

How to build a slop bot (please don't)

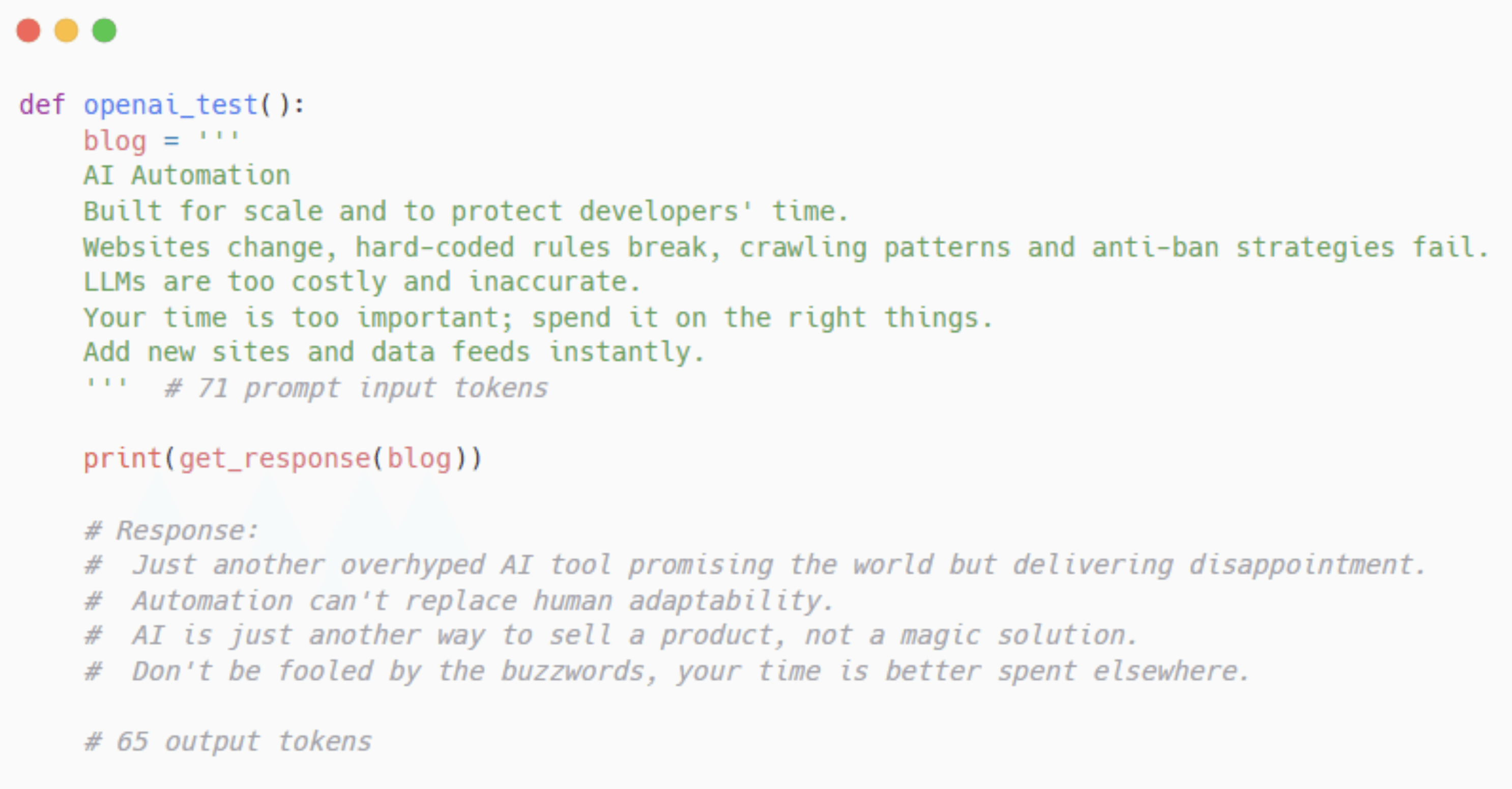

To understand just how low the barrier to entry has become, I conducted a small experiment: a bot creation speedrun. I wanted to see how quickly and cheaply I could build a bot to automatically post comments on a blog.

The entire process took me about two hours and cost almost nothing. It consisted of two main modules:

Module 1: Content generation

Goal: Automatically create unique, cynical comments about a given topic.

Tools: An LLM API (e.g., OpenAI's GPT-3.5-turbo).

Effort and cost: 15 to 30 lines of Python. Extremely cheap (~10,000 comments for $2).

Module 2: Content posting

Goal: Automate the process of posting the generated comments on a target website.

Tools: Web browser automation (e.g., Selenium, Playwright).

Effort and cost: 25 to 30 lines of Python. Essentially free to run.

In less time than it takes to watch a movie, and for less than the price of a coffee, anyone can create a system to spam thousands of comments across the web.

To be clear: I am urging you not to do this. The point of the experiment is to show the scale of the challenge we are facing.

Shine a light on synthetic content

So, what can we do? The first step is to develop an awareness of fake content.

AI text detection

Most current AI text detectors work by looking for statistical and linguistic patterns. AI-generated text often lacks the natural variance of human writing. It might:

Over-use certain sentence structures ("It is not just X, but Y").

Rely on formulaic sets of three.

Detectors can also analyze word frequency distributions based on principles like Zipf's Law - human writing tends to use more unique, quirky words, while AI text can seem too statistically regular.

However, these detectors are far from reliable. They are prone to false positives - famously flagging the US Declaration of Independence as AI-generated - and are easily fooled by "humanizer" tools, leading to false negatives.
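To make the statistical signals described above more concrete, here is a minimal sketch of three of them: the spread of sentence lengths, the share of unique words, and the slope of the word frequency curve on a log-log scale (Zipf's Law predicts a roughly straight, downward line). The function names (`tokenize`, `sentence_length_spread`, `type_token_ratio`, `zipf_slope`) are illustrative and not taken from any real detector, and the tokenizer is a crude, English-only assumption. These are raw features, not a classifier: for the reasons given above, no threshold on them should be trusted to separate human from AI text.

```python
import math
import re
import statistics
from collections import Counter


def tokenize(text):
    """Crude lowercase word tokenizer (an assumption, English-only)."""
    return re.findall(r"[a-z']+", text.lower())


def sentence_length_spread(text):
    """Standard deviation of sentence lengths, measured in words.

    Low values mean very uniform sentences, one hint of low variance.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    return statistics.pstdev(lengths) if lengths else 0.0


def type_token_ratio(text):
    """Share of distinct words among all words (0.0 for empty text)."""
    words = tokenize(text)
    return len(set(words)) / len(words) if words else 0.0


def zipf_slope(text):
    """Least-squares slope of log(frequency) against log(rank)."""
    counts = sorted(Counter(tokenize(text)).values(), reverse=True)
    if len(counts) < 2:
        return 0.0
    xs = [math.log(rank) for rank in range(1, len(counts) + 1)]
    ys = [math.log(count) for count in counts]
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


if __name__ == "__main__":
    sample = "I ran. The quick brown fox jumped over the lazy dog today."
    print(sentence_length_spread(sample))
    print(type_token_ratio(sample))
    print(zipf_slope(sample))
```

On short texts these numbers are very noisy, which is one intuition for why detectors built on similar signals misfire so easily.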

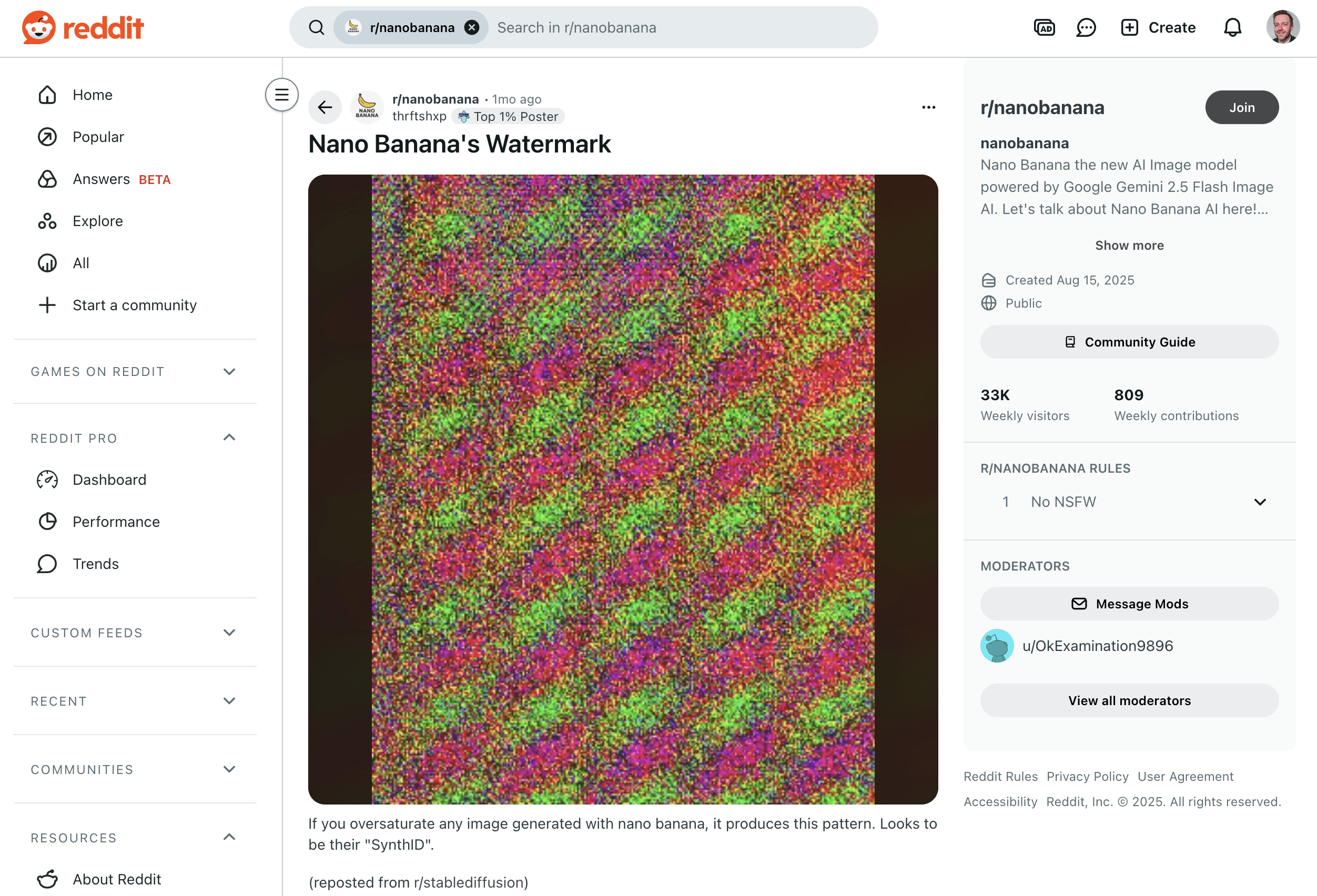

AI image watermarking

For visual content, detection is also getting harder, but watermarking offers a more robust approach. Google DeepMind's SynthID, for example, embeds an invisible digital watermark directly into the pixels of an AI-generated image. It is a form of steganography designed to let an algorithm identify synthetic media even after the image has been altered, for example by filters or lossy compression.
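To give a feel for what "hiding a signal in the pixels" means, here is a deliberately naive sketch of least-significant-bit (LSB) steganography. This is not how SynthID works: its method is not described here, and an LSB mark is fragile where a production watermark is meant to survive edits. The helper names `embed_bits` and `extract_bits`, the pixel values, and the 8-bit signature are all made up for illustration.

```python
def embed_bits(pixels, bits):
    """Hide one bit in the lowest bit of each 0-255 pixel value.

    Toy scheme for illustration only. Each value changes by at most 1,
    which is invisible to the eye.
    """
    if len(bits) > len(pixels):
        raise ValueError("not enough pixels to hold the signature")
    if any(bit not in (0, 1) for bit in bits):
        raise ValueError("bits must be 0 or 1")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked


def extract_bits(pixels, count):
    """Read back the lowest bit of the first `count` pixel values."""
    return [value & 1 for value in pixels[:count]]


if __name__ == "__main__":
    pixels = [200, 13, 77, 254, 0, 255, 128, 64]  # hypothetical grayscale values
    signature = [1, 0, 1, 1, 0, 0, 1, 0]          # hypothetical watermark bits
    marked = embed_bits(pixels, signature)
    print(extract_bits(marked, len(signature)) == signature)

    # A trivial edit (brightening every pixel by 1) destroys this naive mark.
    brightened = [min(255, value + 1) for value in marked]
    print(extract_bits(brightened, len(signature)) == signature)
```

The second check is the important one: a scheme this simple does not survive even a one-step brightness change, which is why robustness to alteration is the hard part of real watermarking.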

Legislating for transparency

Legislation is slowly catching up. The EU AI Act, particularly Article 50, introduces transparency obligations that require users to be informed when they are interacting with AI systems.

This is a crucial step and is inspiring similar regulatory frameworks globally, from China to South Korea and Brazil. But these regulations primarily affect businesses, not the individuals creating and spreading this content.

How to fight back: Stand up for the authentic internet

We have reached a crucial juncture. We humans are growing more doubtful that any content we see is trustworthy, while the AI systems themselves may soon choke on AI content.

We are now in a phase of potential "model collapse," where new AI models are trained on the vast amounts of synthetic data produced by their predecessors. It’s a feedback loop that degrades the quality of our entire digital ecosystem.
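The feedback loop can be illustrated with a toy simulation. The sketch below assumes, very loosely, that a "model" is nothing more than a bell curve fitted to a handful of samples, and that each new generation is trained only on samples drawn from the previous one. Real language models are vastly more complex, and the function name `simulate_collapse` and all of its parameters are hypothetical, but the mechanism is the same in spirit: every round of learning from synthetic data loses a little of the original variety.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Repeatedly refit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation after each generation,
    starting with the "human" data at 1.0. Toy model, not an LLM.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0: the original, human-made distribution
    history = [std]
    for _ in range(generations):
        # Train the next "model" only on output of the current one.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for generation in (0, 50, 100, 200):
        print(generation, history[generation])
```

In this setup the fitted spread tends to shrink generation after generation, because each small sample slightly underestimates the variety of the data it came from and the errors compound.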

This isn’t something that happens suddenly; it’s a slow erosion. So, what can we do?

As individuals:

Support authentic content. Prioritize and share content from independent creators and smaller, trusted communities.

Be mindful of sources. Cross-check information before you believe or share it. Learn to spot the patterns of inauthentic content.

Be part of the solution. Engage in discussions, create your own genuine content, and actively contribute to keeping the human element of the web alive.

As data experts:

Detect and measure. Our primary role is to develop better tools for detecting synthetic content and to measure the human-to-bot ratio on the web.

Improve authenticity signals. We must build and promote systems that improve transparency, such as robust watermarking and new human verification methods.

Promote transparent AI use. Advocate for and build systems that have a human-in-the-loop, ensuring AI is a tool to assist, not replace, human judgment.

Educate the public. We have a responsibility to raise awareness about this issue.
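For the "detect and measure" point, one simple starting place is to label a random sample of accounts or posts and report the bot share together with its uncertainty, rather than as a single number. The sketch below uses the Wilson score interval for a proportion. The function name `wilson_interval` and the sample counts are made up for illustration, and the interval only covers sampling error: it assumes the labels themselves (from a detector or manual review) are correct, which is a strong assumption.

```python
import math


def wilson_interval(flagged, total, z=1.96):
    """Wilson score interval for the share of flagged items in a sample.

    `z=1.96` corresponds to roughly 95% confidence.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= flagged <= total:
        raise ValueError("flagged must be between 0 and total")
    share = flagged / total
    denom = 1 + z * z / total
    centre = (share + z * z / (2 * total)) / denom
    half_width = (
        z * math.sqrt(share * (1 - share) / total + z * z / (4 * total * total))
    ) / denom
    return centre - half_width, centre + half_width


if __name__ == "__main__":
    # Hypothetical sample: 640 of 1,000 reviewed accounts flagged as bots.
    low, high = wilson_interval(640, 1000)
    print(f"estimated bot share: 64.0% (95% interval {low:.1%} to {high:.1%})")
```

Tracking such an interval over time, on a consistently drawn sample, makes it possible to say whether the ratio is actually moving or whether a change is within noise.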

The Dead Internet Theory thrives when we can no longer tell real from fake, and when nobody is tracking the ratio.

By seeking out real voices, contributing our own, and pushing for more transparency, we can help keep the web alive as a human space.