Is your data pipeline built on “vibes”? Is your scraping operation an unclear series of unfolding actions, understood only by its lead author?

When rookie software developers encountered frustrating results from conversational AI coding in early 2025, experienced peers recommended adopting an old trick - the Product Requirements Document (PRD).

A PRD is a comprehensive specifications document that outlines the features, functionality, and requirements of a product, serving as a guide for product development.

Developers now recognize the value in providing their AI code assistant or human team with such a document because, the moment a project graduates from being one person’s mere idea, it demands an upfront and clear articulation of intentions and aims. That is how you alleviate errors and time-consuming misinterpretations.

In my years in web scraping, I never met a web scraping project that wouldn’t have benefited from the same kind of clarity. So, how can data engineers apply the same approach to the craft of data gathering?

Data is your product

PRD 101

While specification documents have long been part of software development, the modern PRD took shape in Silicon Valley in the 2000s as a response to the static and exhaustive documents typical of “waterfall” software development.

The software product manager (PM) – itself, a new discipline – usually owns the document. She is responsible for ensuring it includes both input from and instructions to team members.

There is no universally-agreed format, but a typical PRD contains the following elements:

Problem statement.

User stories.

Functional and non-functional requirements.

Design and technical considerations.

Milestones.

Assumptions.

Dependencies.

Success metrics.

No two PRDs are the same, but here is a classic example of the format:

(Source: Kevin Yien’s (Square) PRD template)

Product documents like this have become integral to modern software development practices. But what about web scraping?

Spec docs for web scraping

While they may not feel like it, web scraping engineers are essentially the “product owners” of their company’s data extraction pipeline. Unlike in software development, for them, the deliverable is data.

Whether you are a solo team calling on AI code assistants to write spiders or part of a multi-person engineering crew working with business colleagues, your data gathering system deserves the same clarity and rigor as any other product.

Research by MIT Center for Information Systems Research found that organizations which apply product management practices to data – treating data as a valuable offering, with a lifecycle of its own – are better able to make the most of their data assets, creating accountability for delivering value over time.

Fran Muñoz, Zyte Data’s Head of Delivery, can vouch for that. “We can’t think of a single data project that didn’t benefit from adopting a product mindset,” he says. “Going from thinking, ‘How can we get the data?’ to, ‘How can we make sure the data we deliver solves this exact business problem? ’ is a game changer.”

Here is how a document like this helps Fran and others like you with web scraping:

Collect what’s correct: Without clear intention, teams risk scraping what’s available instead of what’s needed. Noting target data in a document like a PRD prevents pristine-but-pointless datasets.

Shine a light on dependencies: Overlooking libraries required by your process could fail the whole pipeline. PRDs ensure crucial tool dependencies are made clear from the start.

Ensure data quality: Integrity issues go unnoticed if required fields, validation rules and success metrics are not defined. PRDs support the creation of rigorous test plans.

Keep scope on a rope: timelines and budgets are at risk when stakeholders aren’t aligned on scope. PRDs make trade-offs and new requests easier to consider, because the baseline scope is agreed by all.

Anatomy of a web scraping PRD

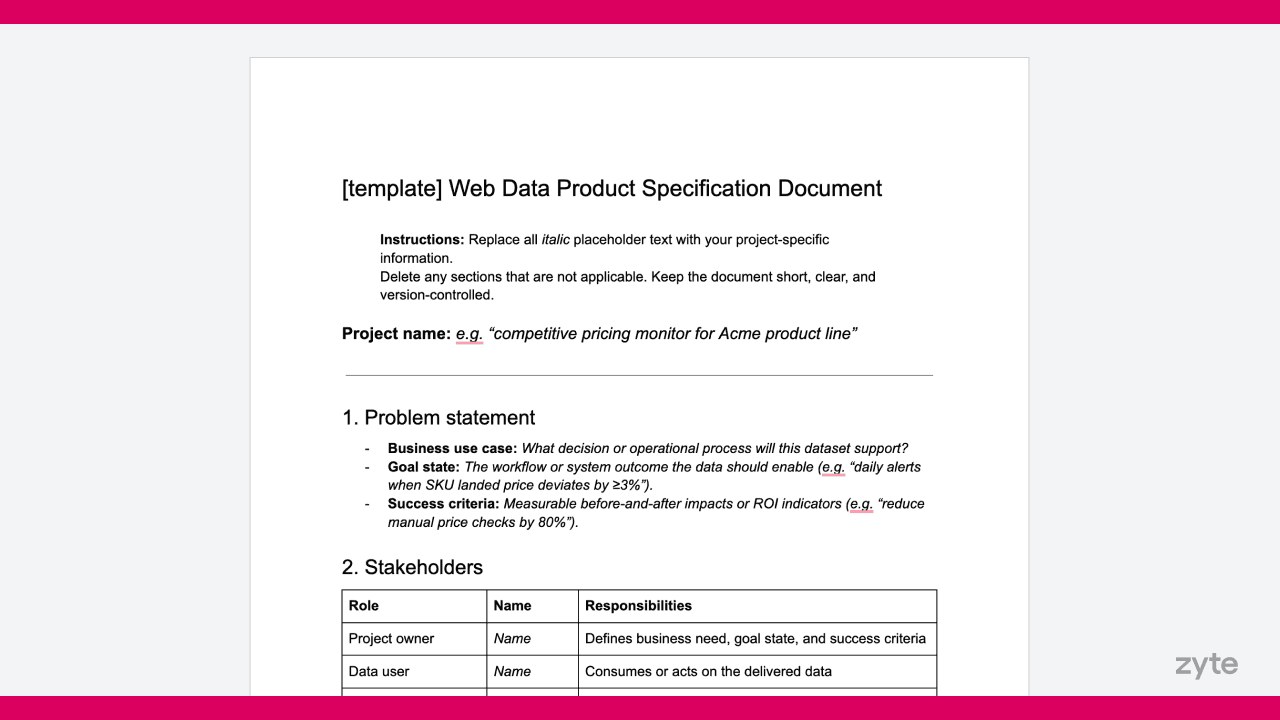

A robust web scraping PRD has at least four key elements:

Problem statement: Why the data is needed, what it should enable, and how success will be measured.

Stakeholder map: Roles, responsibilities, and approval paths.

Technical requirements: Guardrails, dependencies, and timelines.

Implementation details: Data sources, field schema, cadence, and formats. What’s in and out-of-scope.

Each element builds upon the previous. When stakeholders agree on the problem, the requirements are validated. With valid requirements, implementation lands on time and on budget.

With this document, you’re ready to take the first step toward managing web scraping projects like a product.

Writing your first web scraping PRD

If you are keen to adopt this approach for your scraping projects but don’t know where to start, consider these tips.

1. Paint a picture of the problem

Mid-project changes of scope are an engineer killer. But, often, changes of plan arise due to poor initial articulation of the project’s aim.

This makes an effective problem statement critical. So, when drafting your document’s problem statement, bear this in mind:

Anchor the dataset to your broader business needs. Why is the data necessary? Whether it is “Enabling pricing teams to monitor competitors more effectively” or “Helping operations detect product stock-outs faster”, always state the ultimate application for the extracted data.

Express the goal state. Imagine your data, alive in a user’s resulting workflow. Where does it fit in? “A weekly spreadsheet with the list of product prices” and “Daily automated alerts when an SKU’s total price deviates by ≥ 3% from our own” are quite different, so be clear.

Agree on success criteria. What is the resulting impact of your data? Whether it is a margin improvement, reduction in manual work hours or optimizing ad spend, the material gain is an important context that helps a team fully understand the data project.

2. Assign responsibilities to people

Misaligned ownership is one of the fastest ways to derail a timeline and blow out a project’s scope. So, clearly express who is responsible for what.

Typical roles on a scraping project may include data user, project owner, compliance officer, and technical lead. One person can wear several hats, but responsibilities must be clear so nothing falls between the cracks.

3. Design the technical guardrails

Unlike most software products, which largely operate in a constant, controllable environment, web scraping projects are volatile. Websites can adjust their structure or tighten access overnight. Your data pipeline must be designed for breakage, budgeted for maintenance, and monitored for recovery.

Map every dependency – from the frameworks and infrastructure your crawlers run on, to the source files, storage solutions, and downstream tools they connect to – so that your data can flow with a proper recovery plan for each.

4. State implementation details thoroughly

With the goals agreed and guardrails in place, you can now specify what will be built and how exactly they should behave.

When data sources are not fully documented, you could be collecting incorrect data from the wrong pages. List each source with crawl type, delivery frequency, geography, and required navigation actions so you get the right data at the right frequency.

Data quality issues creep in when schemas aren’t locked in. Write down sample values and required transformations so engineers and QA test against the same rules.

The great specification

Great prompts, great products, great datasets – they all start with the same thing: unambiguous intent.

Great specifications help manage great expectations. Write it, share it, keep it alive.

Don’t power your extraction with vibes alone.