From a startup hack to the world’s most-used open-source web scraping framework.

When Scrapy 1.0 launched in 2015, it marked a turning point — not just for the tool itself, but for an entire community of developers scraping the web for structured data.

Built out of necessity inside a startup and shared with the world as open source, Scrapy has since become the foundation for thousands of crawlers, pipelines, and tools that run in production every day.

To celebrate a decade of Scrapy 1.0, we’re revisiting its journey across four key chapters.

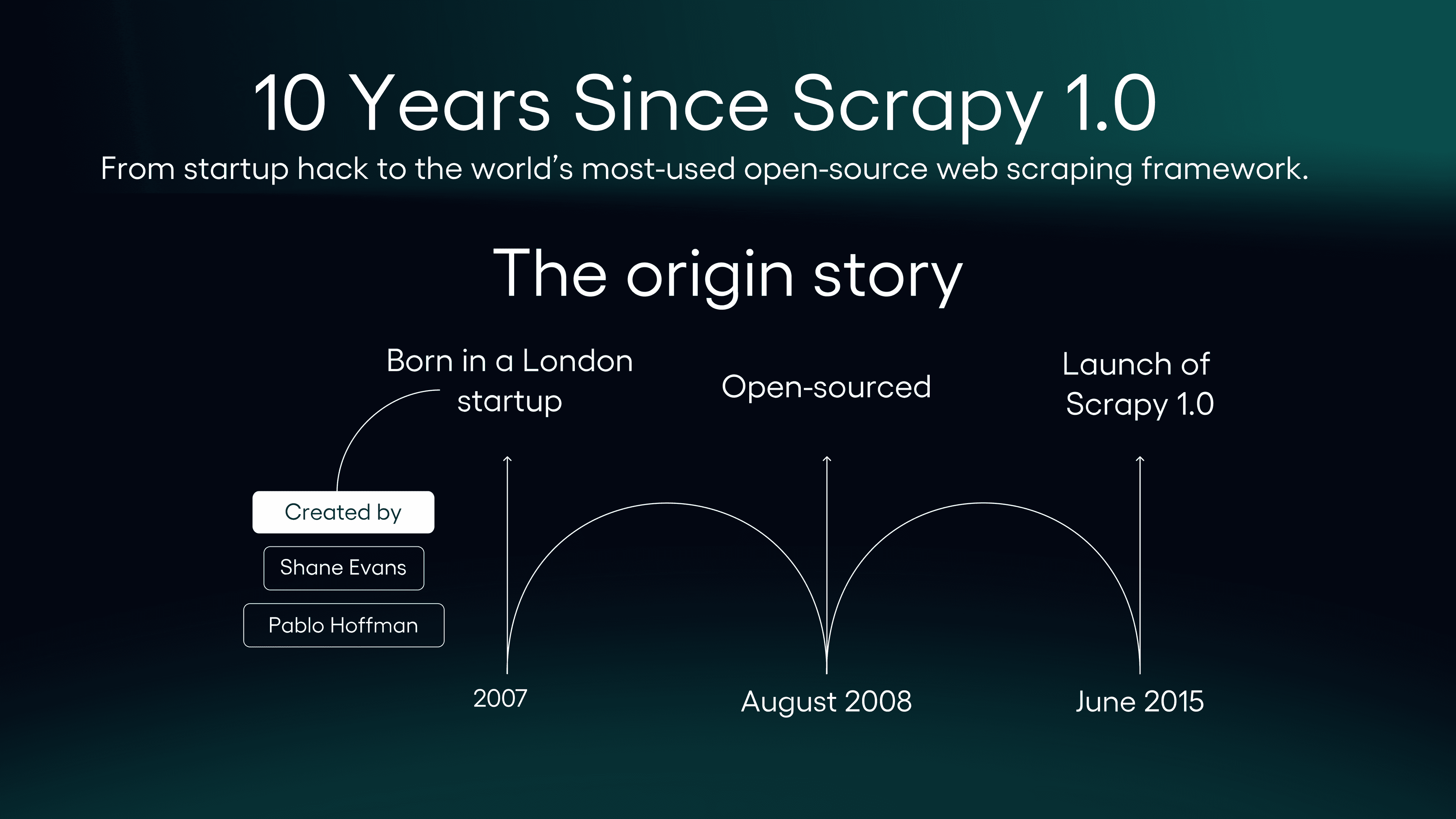

How it all started

Scrapy was born in 2007, inside a London startup that needed structured data from across the web. What started as a practical internal tool grew into a framework designed to let developers focus on extracting the data they needed while it handled the crawling infrastructure.

Shane Evans and Pablo Hoffman shaped the project from the start, working together to expand its architecture and improve its usability. In 2008, they open-sourced it under the BSD license, a decision that opened the door for community growth and long-term evolution.

For the complete backstory, from the early architecture to the moment Scrapy became open source, read the full origin story.

Growth by the numbers

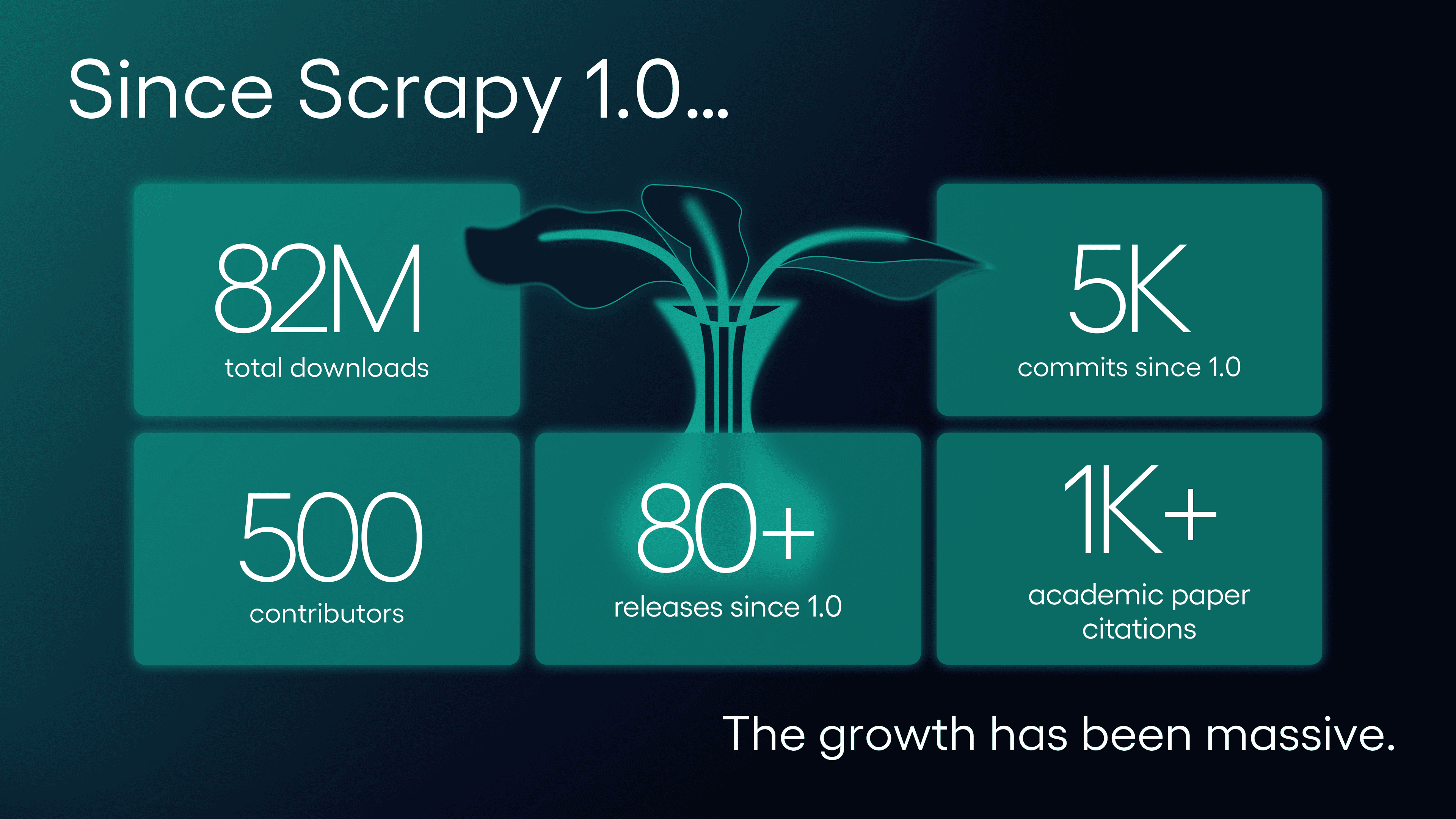

In the ten years since version 1.0, Scrapy’s adoption has surged — driven by its extensibility, performance, and growing ecosystem. Developers have downloaded it over 82 million times.

Its GitHub repository has accumulated nearly 11,000 commits over the project's full history, more than 5,000 of them since the 1.0 release. Over 500 developers have contributed code, and the project has published more than 80 official releases.

Scrapy’s impact goes beyond GitHub stats. It has been cited in over 1,000 academic papers, supports developers in more than 41 countries, and has served as the foundation for companies, research projects, and market-monitoring tools.

We’ve compiled some of the biggest moments and stats in a single visual snapshot.

How Scrapy evolved under pressure

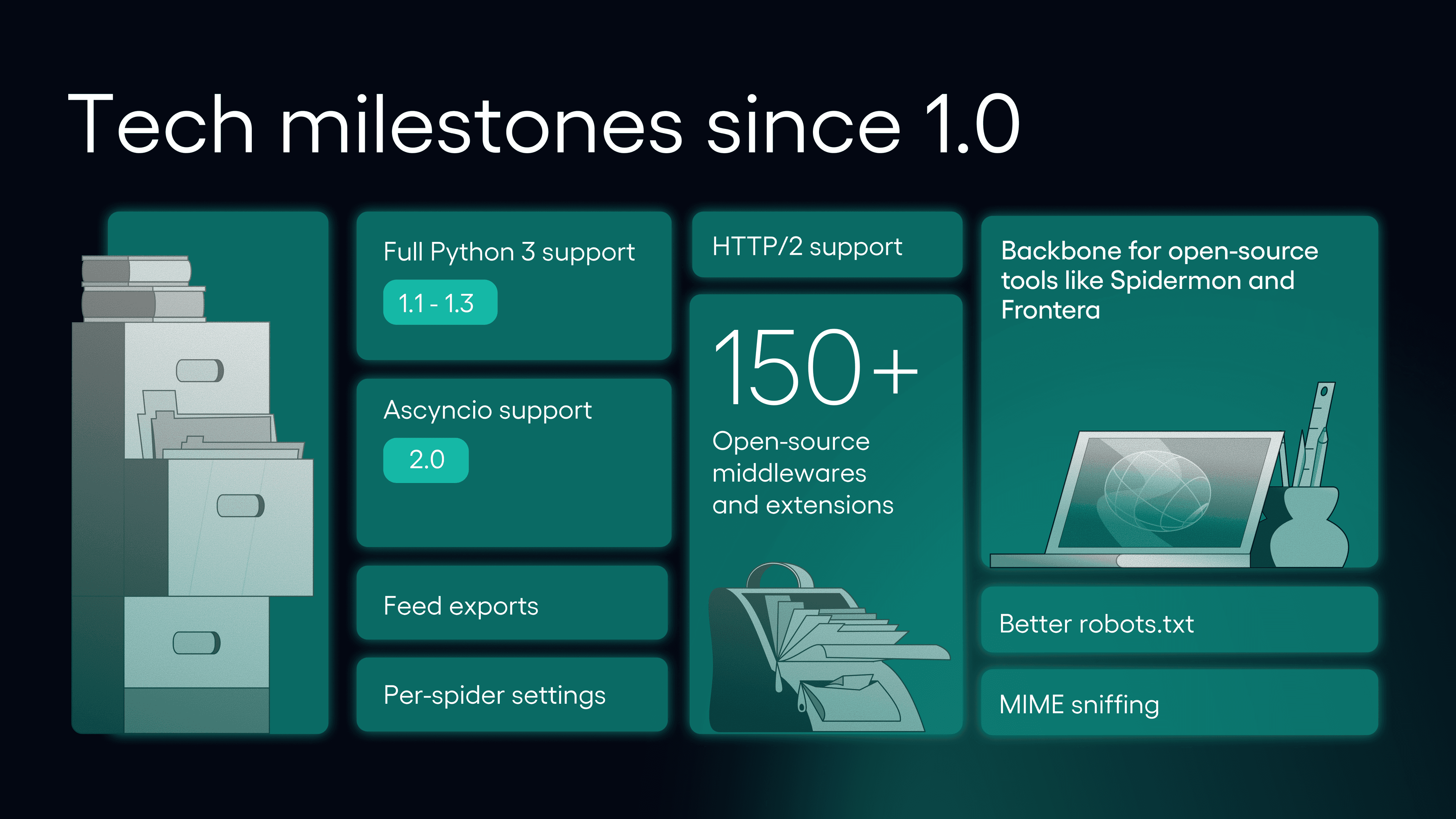

Scrapy’s strength lies in its architecture, but also in its ability to evolve. The port to Python 3, started in version 1.1 and completed over several releases, took years of careful refactoring. Twisted remained a strong foundation, but starting with version 2.0, developers could also run Scrapy on top of Python’s asyncio, which made it possible to combine Scrapy with asyncio-based libraries and coroutine-based code.

Other improvements followed: better feed exports, per-spider settings, more robust robots.txt parsing, MIME sniffing, and HTTP/2 support. Many of these came from the community; others were shaped by real-world use at scale. Through it all, Scrapy never strayed from its original vision: it does one thing, web crawling, and does it well. Everything else lives in middleware, plugins, or separate tools.

A framework carried by its community

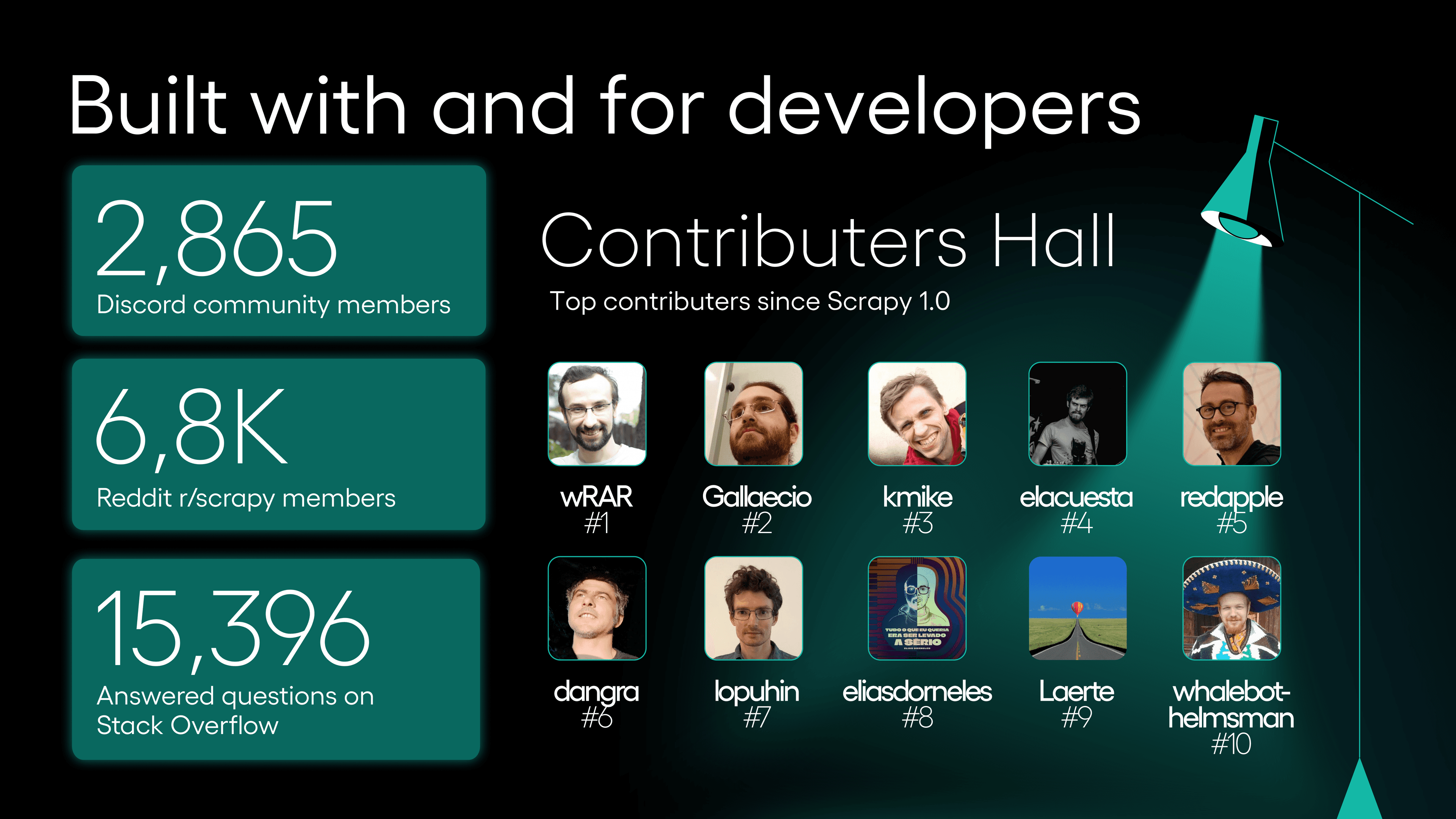

The heart of Scrapy has always been its contributors. Whether through GitHub issues, pull requests, Discord threads, or Stack Overflow answers, developers have helped shape the framework one patch at a time. Scrapy’s plugin ecosystem has flourished, and dozens of complementary projects — from Spidermon to Frontera — have emerged from community needs.

Many early users became long-term maintainers. Some joined Zyte, the company that now stewards Scrapy’s development. Others continued contributing from around the world. From Google Summer of Code students to enterprise data teams, the Scrapy community spans a wide range of use cases — and a shared commitment to open, reusable tools.

Ten years after version 1.0, Scrapy remains one of the most downloaded, forked, and trusted scraping tools in the Python ecosystem. As always, its future will be written by the people who build with it.

What comes next

The web continues to evolve — and Scrapy is evolving with it. From dynamic content and JavaScript-heavy sites to new unblocking strategies and data ethics, the challenges of web scraping have never been more complex. But the foundation remains the same: a focused, modular, open-source framework built to adapt.

Whether you’ve contributed to Scrapy’s codebase, built production crawlers with it, or just spun up your first spider — this anniversary is for you.